Just last year, I completed my undergraduate degree at UCL with a First Class Honors BSc in Applied Medical Sciences. As part of my degree, I had the opportunity to pursue a nine-month research project culminating in a dissertation. My project focused on using deep learning to automate the cardiac MR image planning protocol.

An Introduction to Cardiac MRI

Cardiac magnetic resonance (CMR) imaging is used to visualize the heart and evaluate heart diseases such as cardiomyopathy, ischemic heart disease, valvular defects and pericardial defects. In a typical CMR protocol, the patient is moved into a large barrel-shaped scanner and technicians acquire images of the heart.

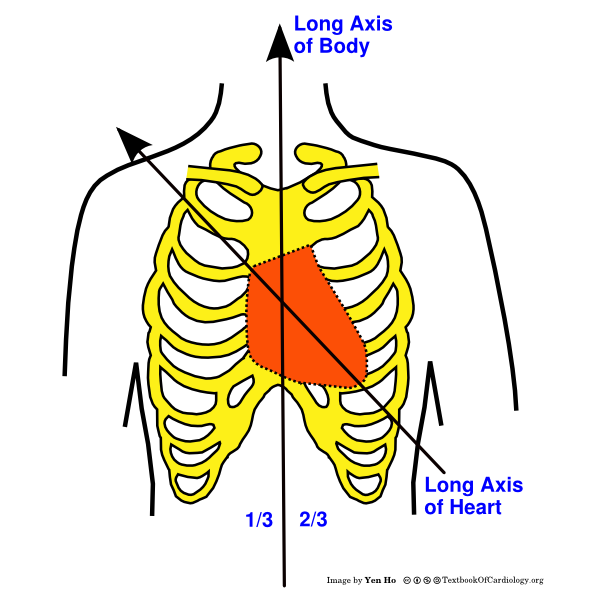

Delving a bit deeper into the imaging protocol, the first step is to acquire images in the standard anatomical views – which are the sagittal, transverse and coronal views, shown below.

How are imaging views planned?

This is a step-by-step protocol outlined in detail in cardiac MRI textbooks. Basically, the radiographer takes an initial image of the heart (preferably in the standard transverse view) and then prescribes lines on it. Using these lines as a guide, the MRI machine “slices” the image to create the subsequent image view.

Many views can be constructed in the CMR protocol, but two are mandatory for the radiographer to capture: the four-chamber (4Ch) view and the two-chamber (2Ch) view. They are so named because they visualize different chambers of the heart.

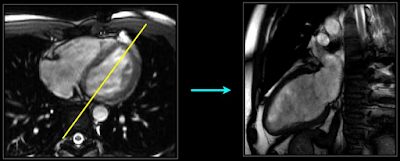

In the 4Ch view, all four chambers of the heart are visible, while in the 2Ch view, only two are visible. To create the 4Ch view, the radiographer first prescribes a line on the transverse image. Then, on the 4Ch image, they prescribe another line passing through the left side of the heart (through the mitral valve and ventricular apex), slicing the image to create the 2Ch view. The workflow below shows the 4Ch view on the left and how a line is drawn (shown in yellow) to slice the image and create the 2Ch view on the right.

Disadvantages of the protocol

The current cardiac MRI protocol has disadvantages. Firstly, it is time-consuming, taking at least an hour per patient. Furthermore, images need to be sliced very carefully so that the cardiac features are shown correctly and the doctor can make an accurate diagnosis. This requires competent radiographers, who are not available in all hospitals. The process is also manual, which leaves considerable room for error.

For these reasons, it would be beneficial to automate this protocol, and deep learning is a natural fit for the task. So in this project, I trained neural networks to take a 4Ch cardiac image as input and predict the planning line for the 2Ch view. This hasn’t been studied much previously, and because this project is just a proof of concept, I focused only on the 4Ch and 2Ch views. However, the approach could be extended to more views, such as 3Ch and 5Ch.

My Approach to Planning Line prediction

Segmentation

As you can see, there are three distinct elements in the image – the dog, the chair and the background – let’s label them as ‘class 1’, ‘class 2’ and ‘class 3’ respectively. How do we convert the original image on the left to the segmented image on the right? This is done by mapping every pixel on the original image to one of the three classes. So the pixels on the corners of the image belong to the background and will be assigned to class 3, the pixels that make up the dog will be in class 1 and the pixels for the chair will be class 2. When we view the image, we can color-code it so that pixels in classes 1, 2 and 3 will have the colors yellow, blue and red respectively. This is how we create the segmented image, also called the mask.

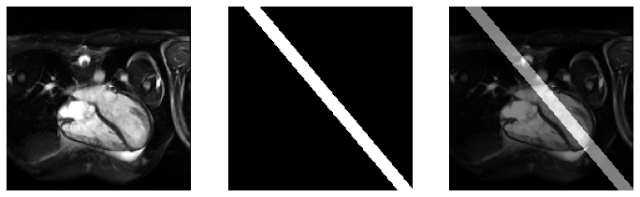
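To make the pixel-to-class mapping concrete, here is a minimal NumPy sketch. The tiny label map and the `colorize` helper are made up for illustration (they are not from the project); only the class numbering and the yellow, blue and red colors follow the description above.

```python
import numpy as np

# Hypothetical 4x6 label map: 1 = dog, 2 = chair, 3 = background.
labels = np.array([
    [3, 3, 3, 3, 3, 3],
    [3, 1, 1, 2, 2, 3],
    [3, 1, 1, 2, 2, 3],
    [3, 3, 3, 3, 3, 3],
])

# Color lookup table indexed by class id (row 0 is unused).
palette = np.array([
    [0, 0, 0],       # unused
    [255, 255, 0],   # class 1: yellow
    [0, 0, 255],     # class 2: blue
    [255, 0, 0],     # class 3: red
], dtype=np.uint8)


def colorize(label_map, palette):
    """Replace each pixel's class id with its RGB color."""
    return palette[label_map]


mask_rgb = colorize(labels, palette)  # shape: (rows, cols, 3)
```

In a real segmentation model, the label map would come from the network's per-pixel class prediction rather than being typed in by hand.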

The same idea can be applied to 2Ch line prediction. In the 4Ch cardiac image, each pixel can be classified as belonging to one of two classes: the 2Ch line or the background. Using a supervised learning approach, I trained a convolutional neural network called the “UNet” to predict a mask for the 2Ch line, and from this mask I reconstructed the predicted 2Ch line.

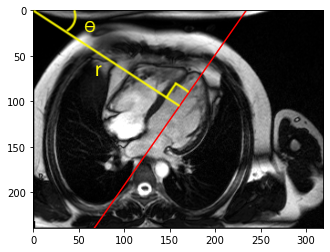
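As a rough illustration of the two conversions involved (line to mask for training targets, and mask back to line after prediction), here is a NumPy sketch. It is not the code from the project: the function names, the line thickness and the choice of fitting the line with a principal-axis (SVD) fit are all assumptions made for this example.

```python
import numpy as np


def line_to_mask(shape, p0, p1, thickness=1.5):
    """Binary mask that is 1 where a pixel lies within `thickness`
    pixels of the segment p0-p1. Points are (row, col)."""
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    pts = np.stack([rows, cols], axis=-1).astype(float)
    p0 = np.asarray(p0, dtype=float)
    p1 = np.asarray(p1, dtype=float)
    d = p1 - p0
    # Position of the nearest point on the segment, clipped to [0, 1].
    t = np.clip(((pts - p0) @ d) / (d @ d), 0.0, 1.0)
    nearest = p0 + t[..., None] * d
    dist = np.linalg.norm(pts - nearest, axis=-1)
    return (dist <= thickness).astype(np.uint8)


def mask_to_line(mask):
    """Fit a straight line to the foreground pixels of a mask and
    return its two endpoints as (row, col) arrays."""
    pts = np.argwhere(mask > 0).astype(float)
    centroid = pts.mean(axis=0)
    # The first right-singular vector is the direction of largest spread.
    _, _, vt = np.linalg.svd(pts - centroid, full_matrices=False)
    direction = vt[0]
    proj = (pts - centroid) @ direction
    return centroid + proj.min() * direction, centroid + proj.max() * direction


# Round trip on a hypothetical 64x64 image.
mask = line_to_mask((64, 64), (10, 12), (50, 40))
start, end = mask_to_line(mask)
```

A predicted mask from a network is usually noisier than this synthetic one, so in practice some thresholding or cleanup would likely be needed before fitting the line.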

Parameter estimation

Training the models

My Results

Overall, I found that the semantic segmentation approach was much more effective than the parameter estimation approach. You can see this in the sample test images below for each type of model. The expected 2Ch line is shown in red while the blue line is the predicted 2Ch line from the neural network.

You can see that the 2Ch line predicted by the segmentation model is pretty close to the true line. I also quantified this by calculating the distance between the true and predicted 2Ch lines for each model. For the segmentation model, the average distance between the lines was 5.7 mm, while it was 30.2 mm for the parameter estimation model.
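For readers wondering what a distance between two lines could look like in code, here is one possible definition as a NumPy sketch. The exact metric used in the project is not spelled out here, so the symmetric mean point-to-segment distance, the function names and the isotropic `pixel_spacing_mm` parameter are all assumptions for illustration.

```python
import numpy as np


def point_to_segment(points, a, b):
    """Distance from each point (N x 2) to the segment a-b."""
    d = b - a
    t = np.clip(((points - a) @ d) / (d @ d), 0.0, 1.0)
    return np.linalg.norm(points - (a + t[:, None] * d), axis=1)


def mean_line_distance_mm(true_line, pred_line, pixel_spacing_mm, n_samples=100):
    """Symmetric mean distance between two segments, in millimetres.

    Each line is a pair of (row, col) endpoints in pixels.
    Assumes the same pixel spacing in both directions.
    """
    ta, tb = (np.asarray(p, dtype=float) for p in true_line)
    pa, pb = (np.asarray(p, dtype=float) for p in pred_line)
    s = np.linspace(0.0, 1.0, n_samples)[:, None]
    true_pts = ta + s * (tb - ta)   # points sampled along the true line
    pred_pts = pa + s * (pb - pa)   # points sampled along the predicted line
    d_true_to_pred = point_to_segment(true_pts, pa, pb).mean()
    d_pred_to_true = point_to_segment(pred_pts, ta, tb).mean()
    return 0.5 * (d_true_to_pred + d_pred_to_true) * pixel_spacing_mm


# Two parallel lines 4 pixels apart, with a hypothetical 1.5 mm pixel spacing.
example = mean_line_distance_mm(((10, 10), (10, 50)), ((14, 10), (14, 50)), 1.5)
```

Averaging in both directions keeps the metric from favoring a very short predicted line that happens to sit on top of the true one.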

The segmentation approach probably worked better because the UNet has a more complex encoder-decoder architecture that can learn the key anatomical landmarks on the 4Ch cardiac images, which are required for constructing the 2Ch line. The parameter estimation model has a less complex architecture, so it likely could not learn these landmarks and performed poorly as a result.

Reflecting on my experience

The results aren’t perfect and there is still room for improvement. But overall, this has been a challenging and fun project for me. Reflecting on my experience, I would say that this project gave me a feel for what real deep learning work involves. Prior to this project, I had taken online courses with coding assignments, but these mostly involved filling in code that others had already written. This project, on the other hand, gave me the opportunity to tackle a real problem, experience the process of writing and debugging full programs and, most importantly, clean and process raw data, which is where practitioners are often said to spend most of their time! Alongside this, I was able to learn more about cardiac imaging and MRI acquisition from experts at Barts Cardiac Imaging Centre.

With this project, my passion for working in the medical AI field has grown. I’m excited about the opportunity to learn more about deep learning during my Machine Learning master’s course, interact with experts in the field and participate in more awesome projects!

This project was executed on Google Colab, a cloud-based Jupyter Notebook service provided by Google. Do check out the Colab notebooks on my GitHub page for the source code. My final thesis report is also accessible in this repository.