Overcoming Data Scarcity

Compared to fields like genomics and bioinformatics, where high-throughput sequencing has produced an abundance of biological datasets, clinical imaging is relatively data-scarce. Some common reasons for this are:

- Cost – Acquiring these images is expensive.

- Annotation – Training computer-aided diagnostic (CAD) algorithms requires a large annotated dataset. Building one requires clinicians to label thousands or even millions of samples by hand, which is slow and costly.

- Privacy – Medical imaging data is often proprietary, and data privacy and ethics concerns make some datasets difficult to access.

- Rare diseases – For diseases that are uncommon in the population, the available datasets are either small or heavily imbalanced. I have run into this issue while working on a deep learning project on inherited retinal diseases. Downstream, this can make CAD algorithms unreliable, since a model may be less accurate on some diseases than on others.
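To make the imbalance in the last point concrete, one quick check is to count samples per class and work out how many extra examples each minority class would need to match the largest one. That number is roughly the gap a synthetic-data generator would be asked to fill. This is a minimal sketch; the class names and counts are hypothetical.

```python
from collections import Counter


def samples_needed_to_balance(labels):
    """Extra samples each class would need to match the largest class."""
    counts = Counter(labels)
    target = max(counts.values())
    return {cls: target - n for cls, n in counts.items()}


def imbalance_ratio(labels):
    """Ratio of the largest class count to the smallest class count."""
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


# Hypothetical per-image labels for a small retinal dataset (illustrative counts only).
labels = ["healthy"] * 500 + ["disease_a"] * 120 + ["disease_b"] * 30

print(samples_needed_to_balance(labels))  # {'healthy': 0, 'disease_a': 380, 'disease_b': 470}
print(round(imbalance_ratio(labels), 2))  # 16.67
```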

GANs can help tackle these problems because they can synthesize new images that resemble images from the real data distribution. A trained generator can therefore augment existing datasets, improve the generalizability of diagnostic models, and help address domain shift in ML (the situation where the training data and unseen test data come from different distributions). Furthermore, because synthetic images are not real, they are not tied to a specific patient, which makes them less of a privacy concern. Broadly, image synthesis can be classified into three types:

1. Unconditional Synthesis

Unconditional synthesis is simply random image generation: the GAN is given a latent input and outputs an image sampled from the learned data distribution. Its data requirements are modest, since all one needs is an unlabelled dataset, which is relatively easy to obtain. Architectures such as DCGAN, WGAN, MSG-GAN, and StyleGAN have been successful for unconditional generation. One example is the work by Frid-Adar et al. in 2018, where DCGANs were used to generate each class of liver lesion separately. Adding their synthetic data to the training of a deep learning classifier raised sensitivity from 78.6% to 85.7% and specificity from 88.4% to 92.4%.
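Sensitivity and specificity, the two metrics quoted above, both come from a classifier's confusion matrix: sensitivity is the fraction of actual positives the model catches, and specificity is the fraction of actual negatives it correctly rules out. A minimal sketch, with hypothetical counts that are not taken from the study:

```python
def sensitivity(tp, fn):
    """True positive rate: fraction of actual positives that are detected."""
    return tp / (tp + fn)


def specificity(tn, fp):
    """True negative rate: fraction of actual negatives correctly ruled out."""
    return tn / (tn + fp)


# Hypothetical confusion-matrix counts for one lesion class (illustrative only).
tp, fn, tn, fp = 42, 8, 180, 20

print(f"sensitivity = {sensitivity(tp, fn):.1%}")  # sensitivity = 84.0%
print(f"specificity = {specificity(tn, fp):.1%}")  # specificity = 90.0%
```

Comparing these two numbers before and after adding synthetic training data is how an improvement like the one above is measured.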

Another example is synthesizing images of lung nodules. One of the challenges in lung cancer diagnosis is classifying a nodule as benign or malignant. CAD systems can support radiologists here; however, the issue is again dataset size! A study by Chuquicusma, Hussein, and Bagci used DCGANs to generate lung nodules, which they then showed to two radiologists, who classified each nodule as real or fake across multiple visual experiments. The generated nodules convinced one radiologist that they were real in 67% of the visual tests. The second, less experienced radiologist was convinced that the generated nodules were real in all tests. Another benefit I see in approaches like these is that GANs can improve not only diagnostic algorithms but also doctors, since synthetic images can play an educational role.

2. Conditional Synthesis

3. Cross-modality Synthesis

Cross-modality synthesis is similar to pix2pix in that the GAN takes an image in one style as input and outputs an image in another. The key difference is that the images are unpaired. What does this mean? If you look at the retinal images in the previous section, the second row shows the blood vessel tree extracted from the real images in the first row, so each pair of images is related. What if I instead have one set of images in one style and a completely different set of images in another style? That setting is called unpaired image-to-image translation.

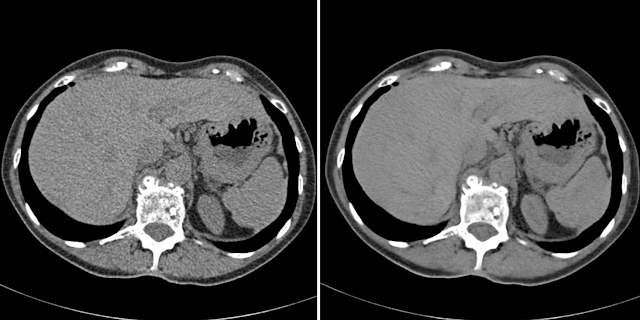

CycleGAN was developed for this kind of unpaired translation. The model has two generators and two discriminators, so it is a larger architecture than a standard GAN. For example, a 2017 study by Wolterink et al. used CycleGAN to convert MR images into CT images, with the result shown in the figure above. The generated and real CT images are remarkably similar!
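In CycleGAN, the two generators are tied together by a cycle-consistency loss: translating an image to the other domain and back should recover the original, measured with an L1 distance. The sketch below computes only that term (the full objective also includes adversarial losses from both discriminators). The plain functions acting on flat lists of pixel values are hypothetical stand-ins for the MR-to-CT and CT-to-MR generator networks.

```python
def l1(a, b):
    """Mean absolute error between two equally sized flat images."""
    return sum(abs(p - q) for p, q in zip(a, b)) / len(a)


def cycle_consistency_loss(g, f, xs, ys):
    """Cycle loss: x -> g -> f should return x, and y -> f -> g should return y."""
    forward = sum(l1(f(g(x)), x) for x in xs) / len(xs)
    backward = sum(l1(g(f(y)), y) for y in ys) / len(ys)
    return forward + backward


# Hypothetical toy "generators"; real ones would be neural networks.
def g_mr_to_ct(img):
    return [2.0 * p + 1.0 for p in img]


def f_ct_to_mr(img):
    # Exact inverse of g_mr_to_ct, so cycles are perfectly consistent.
    return [(p - 1.0) / 2.0 for p in img]


def f_lossy(img):
    # Imperfect inverse that loses some contrast on the way back.
    return [(p - 1.0) / 2.5 for p in img]


mr_images = [[0.0, 0.5, 1.0], [0.25, 0.5, 0.75]]
ct_images = [[1.0, 2.0, 3.0], [1.5, 2.5, 3.5]]

print(cycle_consistency_loss(g_mr_to_ct, f_ct_to_mr, mr_images, ct_images))       # 0.0
print(round(cycle_consistency_loss(g_mr_to_ct, f_lossy, mr_images, ct_images), 6))  # 0.35
```

Because this term needs no paired MR/CT images, only two unpaired collections, it is what makes training on unpaired data possible.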

Imagine how beneficial this would be! CT scans expose the patient to ionizing radiation, which carries health risks. Cross-modality synthesis could reduce the need for patients to undergo a CT scan and lower the cost of acquiring additional images. The big challenge, however, would be integrating this approach into the clinical workflow. That means working closely with doctors to ensure that the generated images are anatomically correct, and convincing radiologists that they can rely on these technologies to analyze images and draw conclusions about a patient’s health. This is something I find quite exciting about machine learning: it brings together researchers and experts from multiple domains to tackle novel problems.

Improving the Quality of Our Current Data

Data cleaning is a massive part of a machine learning workflow. With imaging data, researchers typically examine pixel intensity values, image sizes, or overall quality metrics to decide which samples to keep and which to discard. Discarding data can hurt performance, since we are left with smaller datasets and our models might not generalize well to new data. In these situations, the usual thought is, “why don’t we just go and collect more data?” You can, but it’s a lot of work. Wouldn’t it be nice if we could improve the quality of our existing data instead? I think so.
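A simple rule-based filter of the kind described above might look like the sketch below. The thresholds and the three checks (minimum size, mean intensity range, minimum contrast) are hypothetical choices for illustration; real criteria depend on the modality and the project.

```python
from statistics import mean, pstdev

# Hypothetical quality thresholds for images with intensities scaled to [0, 1].
MIN_SIZE = (4, 4)          # minimum (height, width) in pixels
MEAN_RANGE = (0.05, 0.95)  # acceptable mean intensity
MIN_CONTRAST = 0.02        # minimum intensity standard deviation


def passes_quality_checks(img):
    """Return True if a 2D image (list of rows) passes the simple checks above."""
    h, w = len(img), len(img[0])
    if h < MIN_SIZE[0] or w < MIN_SIZE[1]:
        return False  # too small to be useful
    pixels = [p for row in img for p in row]
    if not MEAN_RANGE[0] <= mean(pixels) <= MEAN_RANGE[1]:
        return False  # nearly black or saturated
    return pstdev(pixels) >= MIN_CONTRAST  # reject near-uniform images


good = [[0.1 * ((r + c) % 5) for c in range(4)] for r in range(4)]
blank = [[0.0] * 4 for _ in range(4)]
tiny = [[0.5, 0.2], [0.3, 0.4]]

dataset = {"good": good, "blank": blank, "tiny": tiny}
kept = [name for name, img in dataset.items() if passes_quality_checks(img)]
print(kept)  # ['good']
```

Every sample this kind of filter rejects shrinks the dataset, which is exactly the cost that motivates improving image quality instead.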

Andrew Ng, who is a role model for me, calls this idea data-centric AI. Given the progress machine learning has made, we already have powerful algorithms that can learn complex tasks. But these algorithms are only as good as the data they are trained on. With that in mind, let’s now discuss some applications of GANs for improving image quality.

Image Denoising

Image denoising removes noise and artifacts from images. These can arise from how the technician sets up the imaging system, as well as from patient-related factors.

We can see the value of GANs for image denoising in a study by Xin Yi and Paul Babyn. They focused on low-dose CT, a type of CT imaging in which the radiation dose is reduced. This benefits patients because they are not overexposed to radiation; the downside is that the images can be noisy and blurry, and therefore hard to analyze. To address this, the study used a conditional, “sharpness-aware” GAN whose input is the noisy low-dose CT and whose output is a denoised CT. The model differs from a typical GAN because, in addition to a generator and a discriminator, it uses a sharpness detection network: the sharpness map of the generated image is compared with that of the ground-truth image, which forces the generator to produce sharper images in low-contrast regions. Their experiments found that the quality of the denoised images was comparable to that of state-of-the-art methods.
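The idea of penalizing differences in sharpness, alongside the usual pixel-wise and adversarial terms, can be sketched as a weighted generator loss. This is a simplified illustration, not the paper's implementation: it swaps the learned sharpness detection network for a hand-crafted finite-difference gradient magnitude, takes the adversarial term as a given number, and the weights `lam_pix` and `lam_sharp` are hypothetical.

```python
def sharpness_map(img):
    """Hand-crafted stand-in for a sharpness detector: forward-difference gradient magnitude."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            dx = img[r][c + 1] - img[r][c] if c + 1 < w else 0.0
            dy = img[r + 1][c] - img[r][c] if r + 1 < h else 0.0
            out[r][c] = (dx * dx + dy * dy) ** 0.5
    return out


def mean_abs_error(a, b):
    """Pixel-wise L1 distance between two same-sized 2D images."""
    diffs = [abs(p - q) for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def mean_sq_error(a, b):
    """Pixel-wise squared distance between two same-sized 2D maps."""
    diffs = [(p - q) ** 2 for ra, rb in zip(a, b) for p, q in zip(ra, rb)]
    return sum(diffs) / len(diffs)


def generator_loss(denoised, reference, adv_term, lam_pix=1.0, lam_sharp=1.0):
    """Hypothetical composite loss: adversarial + pixel L1 + sharpness-map difference."""
    pixel = mean_abs_error(denoised, reference)
    sharp = mean_sq_error(sharpness_map(denoised), sharpness_map(reference))
    return adv_term + lam_pix * pixel + lam_sharp * sharp


reference = [[0.0, 0.0, 1.0, 1.0] for _ in range(4)]  # sharp vertical edge
blurry = [[0.0, 0.25, 0.75, 1.0] for _ in range(4)]   # same edge, smoothed

print(generator_loss(reference, reference, adv_term=0.0))  # 0.0
print(generator_loss(blurry, reference, adv_term=0.0))     # positive: the blur is penalized
```

The sharpness term adds a penalty that the pixel-wise term alone can underweight: a blurred edge may be close in average intensity yet have a very different gradient profile.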

Image Reconstruction

When conducting an imaging study, patient movement or a reduced radiation dose can also degrade image quality. In this scenario, GANs can be used to reconstruct the images by performing super-resolution, enhancing the resolution to bring out features that are not clearly visible in the original image. A study by Kim et al. in 2018 used a GAN to perform super-resolution: the input was a low-resolution MR image in one contrast, and the output was a higher-resolution MR image in another contrast. This could potentially accelerate the MRI scanning process, benefiting patients.
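Super-resolution models are typically trained on pairs of low- and high-resolution images. One common way to build such pairs (an assumption here, not necessarily the protocol Kim et al. used, since their input and output were also in different contrasts) is to simulate the low-resolution input by downsampling the high-resolution target. A minimal sketch using block averaging on a 2D list of pixel values:

```python
def downsample(img, factor):
    """Average non-overlapping factor x factor blocks to simulate a low-resolution scan."""
    h, w = len(img), len(img[0])
    if h % factor or w % factor:
        raise ValueError("image size must be divisible by the downsampling factor")
    return [
        [
            sum(img[r + i][c + j] for i in range(factor) for j in range(factor)) / factor**2
            for c in range(0, w, factor)
        ]
        for r in range(0, h, factor)
    ]


def make_training_pairs(hr_images, factor=2):
    """Build (low-res input, high-res target) pairs for a super-resolution generator."""
    return [(downsample(hr, factor), hr) for hr in hr_images]


hr = [[0.0, 1.0, 2.0, 3.0],
      [4.0, 5.0, 6.0, 7.0],
      [8.0, 9.0, 10.0, 11.0],
      [12.0, 13.0, 14.0, 15.0]]
lr, target = make_training_pairs([hr])[0]
print(lr)  # [[2.5, 4.5], [10.5, 12.5]]
```

The generator then learns the reverse mapping, from `lr` back to `target`.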

Image reconstruction can also be used to sharpen specific features in an image. A study by Mahapatra et al. in 2017 used a loss function that reweights image pixels according to their relative importance. This can be useful when a doctor wants to see the blood vessels or lesions in an image more clearly than other features.
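The general idea of reweighting pixels by importance can be sketched as a weighted squared-error loss. This is a minimal illustration, not Mahapatra et al.'s exact loss; the importance map here is hand-written and hypothetical, whereas in practice the weights would be derived from the image, for example from a saliency map.

```python
def weighted_mse(pred, target, weights):
    """Pixel-wise squared error, reweighted by an importance map and normalized by total weight."""
    num = sum(w * (p - t) ** 2
              for pr, tr, wr in zip(pred, target, weights)
              for p, t, w in zip(pr, tr, wr))
    den = sum(w for wr in weights for w in wr)
    return num / den


target = [[0.0, 1.0], [0.0, 0.0]]        # pixel (0, 1) is, say, part of a vessel
pred = [[0.0, 0.5], [0.5, 0.0]]          # two errors of equal size
uniform = [[1.0, 1.0], [1.0, 1.0]]
vessel_focus = [[1.0, 4.0], [1.0, 1.0]]  # hypothetical importance map

print(weighted_mse(pred, target, uniform))       # 0.125
print(weighted_mse(pred, target, vessel_focus))  # about 0.179: the vessel error now dominates
```

With uniform weights both errors count equally; with the importance map, the error on the vessel pixel contributes four times as much, so training would push harder to get that region right.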

Modelling Disease Progression

Moving beyond image generation and image processing, this is one application of GANs that I would love to explore further. One of the beauties of generative modeling is that it is unsupervised learning: models can build an understanding of the data from the ground up and may pick up on features that we have not noticed.

If you recall, I discussed controllable generation in a previous post. It is the aspect of image synthesis in which one modifies the latent input vector to change the output. Controllable generation can be extremely useful for understanding how anatomical or physiological features change at various stages of a disease, which is called disease progression modeling. It could also be a valuable tool for doctors: to support clinical decisions, to determine how a particular covariate affects image appearance, to motivate a patient by showing a personalized forecast with and without behavioral changes, and to study the effects of drugs in trials by comparing the real progression with a predicted progression.
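One simple way to modify the latent input and watch the output change gradually is linear interpolation between two latent codes. The sketch below builds such a path; the latent size, the random codes, and the `generator` mentioned in the comments are hypothetical, and a trained generator would be needed to render each code as an image.

```python
import random


def lerp(z_a, z_b, t):
    """Linearly interpolate between two latent vectors (t=0 gives z_a, t=1 gives z_b)."""
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]


def latent_path(z_start, z_end, steps):
    """Evenly spaced latent codes from z_start to z_end, inclusive."""
    return [lerp(z_start, z_end, i / (steps - 1)) for i in range(steps)]


random.seed(0)
dim = 8  # hypothetical latent size; real GANs often use hundreds of dimensions
z_early = [random.gauss(0, 1) for _ in range(dim)]
z_late = [random.gauss(0, 1) for _ in range(dim)]

path = latent_path(z_early, z_late, steps=5)
# Each code in `path` would be passed to a trained (hypothetical) generator,
# e.g. generator(z), to render a smooth sequence of images.
print(len(path))  # 5
```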

Alzheimer’s disease (AD) is one condition where disease progression has been studied extensively. A study in 2018 used GANs to model changes in AD. The researchers first trained a GAN to produce images of healthy and AD-affected brains at different stages. Using an encoder network, they then learned latent vectors for different stages of AD by comparing the images generated from each latent vector with real images at that stage. Finally, they measured differences between the latent vectors to identify which components were strongly associated with particular stages of AD. By adding these components to, or subtracting them from, a new latent vector, they could add or remove AD features in the generated image. When we think of image analysis, we generally think of diagnostics, but studies like this could also yield insights into the disease itself, which is exciting.
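The latent arithmetic described above can be sketched with plain vector operations. This is a simplified illustration, not the exact procedure from the 2018 study: it assumes the disease direction is the difference between the mean latent code of AD scans and that of healthy scans, and the 3-D codes are hypothetical stand-ins for encoder outputs.

```python
def mean_vector(vectors):
    """Component-wise mean of a list of equal-length vectors."""
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]


def disease_direction(healthy_codes, ad_codes):
    """Latent direction from the healthy group mean to the AD group mean."""
    h, a = mean_vector(healthy_codes), mean_vector(ad_codes)
    return [ai - hi for ai, hi in zip(a, h)]


def shift(z, direction, alpha):
    """Add (alpha > 0) or subtract (alpha < 0) a scaled disease direction."""
    return [zi + alpha * di for zi, di in zip(z, direction)]


# Hypothetical 3-D latent codes, e.g. from an encoder applied to real MRI scans.
healthy = [[0.0, 1.0, 0.5], [0.5, 0.75, 0.5]]
ad = [[1.0, 1.0, 0.5], [1.5, 0.75, 0.5]]

d = disease_direction(healthy, ad)
print(d)  # [1.0, 0.0, 0.0]

z_new = [0.25, 0.5, 0.5]
print(shift(z_new, d, 1.0))   # [1.25, 0.5, 0.5]  (more AD-like)
print(shift(z_new, d, -1.0))  # [-0.75, 0.5, 0.5] (less AD-like)
```

Feeding the shifted codes to the generator is what would add or remove AD-related features in the rendered image; the size of `alpha` controls how strong the change is.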

That covers some of the most interesting work happening at the intersection of GANs and medical imaging. Overall, I’m really excited about what’s to come, and I hope to be part of this amazing field in the future.

Links:

Generative Adversarial Networks in Medical Imaging: A Review

Modelling the progression of Alzheimer’s disease in MRI using generative adversarial networks

Sharpness-aware low-dose CT denoising using conditional generative adversarial network

Synthetic data augmentation using GAN for improved liver lesion classification