
Whether it’s non-existent problems, unscalable solutions or a lack of imagination, we need to be careful about what educational technology appears to promise.

I have written before about how easy it is to get dazzled by shiny tech things and, most dangerously, to think that those shiny things will herald an educational sea change. More often than not they don’t. Or if they do, it’s nowhere near the pace often predicted. It is remarkable, for example, to look back at the promises interactive whiteboards (IWBs) held. I think I still have a broken Promethean whiteboard pen in a drawer somewhere. I was sceptical from the off when one of the biggest selling points seemed to be something like: “You can get students up to move things around”. I like tech but, as someone teaching 25+ hours per week (how the heck did I do that?), I could immediately see a lot of unnecessary faff. In my experience of schools and colleges, most IWBs are, at best, glorified projectors that rarely fulfil their promise. The research I have seen on their impact is muted at best, and studies in HE such as Benoit (2022) suggest potential detrimental effects. For me, IWBs are emblematic of much of what I feel is often wrong with the way ed tech is purchased and used: big companies selling big ideas to people in educational institutions who have purchasing power and problems to solve but who are, crucially, at least one step removed from the teaching coal face. Nevertheless, because of my role at the time (‘ILT programme coordinator’, thank you very much) I did my damnedest to get colleagues using IWBs interactively, or indeed at all (I was going to say ‘effectively’), other than as a screen, until I realised that it was a pointless endeavour. For most colleagues the IWB was a solution to a problem that didn’t exist.

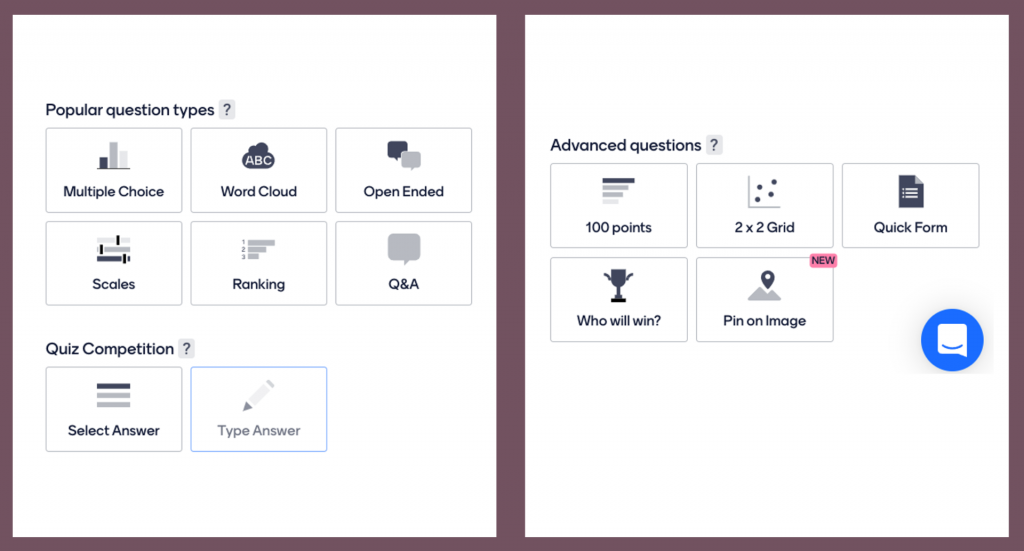

A better-articulated problem is the limited engagement of students, coupled with tendencies towards uni-directional teaching and passivity in large classes. One solution is ‘clickers’. These have in fact been kicking around since the 1960s and foreshadowed modern student/audience response systems like Mentimeter, which are still sometimes referred to as clickers (probably by older-generation types like me). Research was able to show improvements in engagement, enjoyment and academic performance, as well as useful intelligence for lecturing staff (see Kay and LeSage, 2009; Keough, 2012; Hedgcock and Rouwenhorst, 2014), but the big problem was scalability. Enthusiasts could secure the necessary hardware, trial it with small groups of students and report positively on impact. I remember the gorgeous aluminium cases our media team held, each containing maybe 30 devices. I also recall the form filling, the traipse to the other campus, the device registering and the laborious question authoring. My enthusiasm quickly waned and the shiny cases gathered dust on media room shelves. I expect there are plenty still doing so, and many more shelves holding gadgets and gizmos that looked so cool and full of potential but quickly became redundant.

BYOD (bring your own device) and cloud-based alternatives changed all that, of course. The key is not whether enthusiasts can get the right kit but whether very busy teachers can get it too, and whether the results clearly outweigh the effort. There are of course issues with cloud-based BYOD solutions (socio-economic, data, confidentiality and security, to name a few!), but the tech is not going to become obsolete overnight. This is why I am very nervous about big-ticket kit purchases such as VR headsets or smart glasses, and very sceptical about claims regarding the extent to which education in the near future will be virtual. Second Life’s second life might be a multi-million-pound white elephant.

Finally, one of the big buzzes in the kinds of Twitter bubbles I live in is about the ‘threat’ of AI. On the one hand you have the ‘kid in the sweetshop’ excitement of developers marvelling at AI text authoring and video making; on the other, doom-mongering teachers frothing about what these (currently massively inflated) affordances offer our cheating, conniving, untrustworthy youth. The argument goes that problems of plagiarism, collusion and supervillain levels of academic dishonesty will be massively exacerbated. The ed tech solution: More surveillance! More checking! Plagiarism detection! Remote proctoring! I just think we need to say ‘whoa!’ before committing ourselves to anything and see whether we might imagine things a little differently. Firstly, do existing systems for, say, plagiarism detection (putting aside major ethical concerns) actually do what we imagine them to do? They can pick up poor academic practice, but can they detect ‘intelligent’ reworking? The sticking point is: how else will we know what someone has written themselves? But where is our global perspective on this? Where is our 21st-century eye? Where is the acknowledgement of existing tools used routinely by many? There are many ways to ‘stand on the shoulders of giants’, and different educational traditions value different ways of representing this. Remixes, mashups and sampling are a fundamental part of popular culture and the 2020s zeitgeist. Could we not better embrace that reality and way of being? Spellcheckers and grammar checkers do a lot of the work that would have meant lower marks in the past, yet we now use them unthinkingly. Is it such a leap to imagine positive and open use of new tools such as AI?

Solutions to collusion in online exams seem to offer a little more choice: 1. scrap online exams and get everyone back into huge halls, or 2. [insert Mr Burns gif] employ remote proctoring. The issues centre on students’ abilities to 1. look things up to make sure they have the correct answer and 2. work together to ensure they have a correct answer. I find it really hard not to see that as a good thing and an essential skill. I want people to have the right answer. If it is essential to find out what any individual student knows, our starting point needs to be rethinking the way we assess, NOT looking for ed tech solutions so that we can carry on regardless. While we’re thinking about that, we may also want to reappraise the role new tech plays, and will likely play, in the ways we access and share information, and do what we can to weave it in positively rather than go all King Canute.

Benoit, A. (2022) ‘Investigating the Impact of Interactive Whiteboards in Higher Education. A Case Study.’ Journal of Learning Spaces.

Hedgcock, W. and Rouwenhorst, R. (2014) ‘Clicking their way to success: using student response systems as a tool for feedback.’ Journal for Advancement of Marketing Education.

Kay, R. and LeSage, A. (2009) ‘Examining the benefits and challenges of using audience response systems: A review of the literature.’ Computers & Education.

Keough, S. (2012) ‘Clickers in the Classroom: A Review and a Replication.’ Journal of Management Education.