Last week members of the Digital Assessment team joined over 270 delegates at the International Assessment in HE (AHE) Conference 2023 in Manchester. Here are our team highlights:

Assessment Change: nobody said it would be easy – by Marieke Guy

I focused on sessions that looked at new and strategic approaches to assessment.

During an early morning masterclass on debunking myths about assessment and feedback, led by Professor Naomi Winstone (University of Surrey), we explored national survey questions from around the world. While assessment and feedback are often considered the Achilles’ heel of the education sector, interesting research by Buckley in 2021 shows that satisfaction levels remain relatively high, at around 70-75%. The problem is that we compare them against other areas, which is like comparing a trip to the dentist with a holiday. However, there are underlying issues with the questions themselves, as they tend to view academics as providers and students as consumers, hindering a true dialogue. Naomi emphasised the importance of focusing on the areas we can control: student feedback literacy, improving evaluative judgment, enhancing the utility of feedback, and addressing challenges such as anonymity and feedback doing ‘double duty’ (i.e. feedback being written for external examiners and quality assurance as well as students). You can see Naomi’s associated reference list.

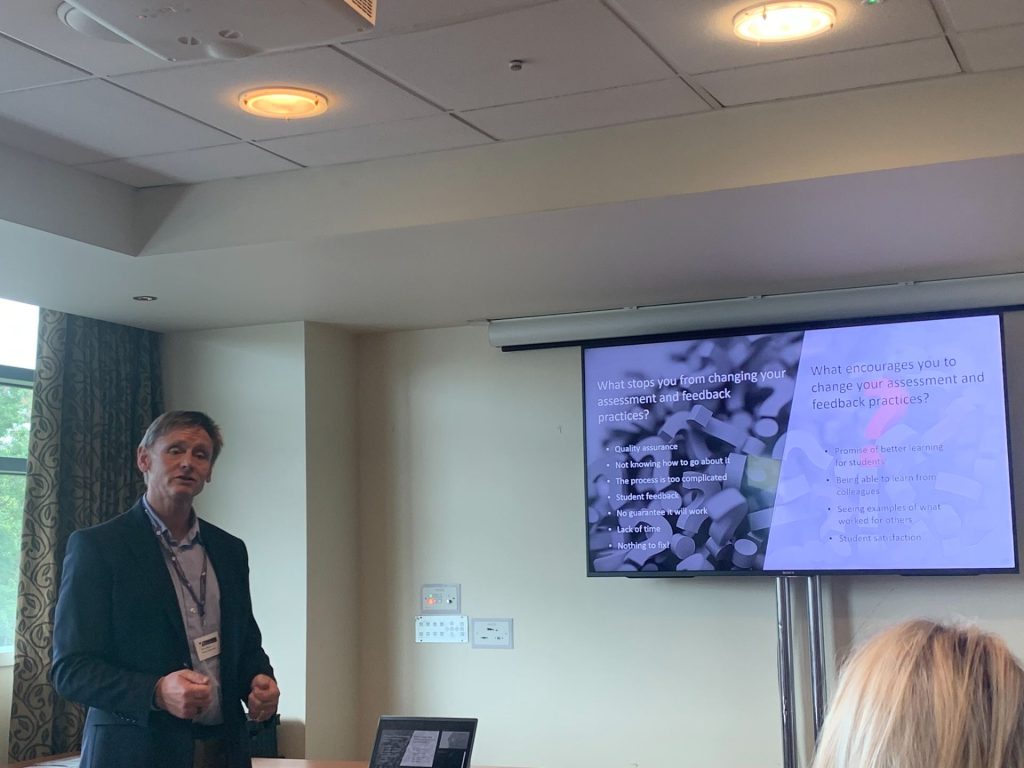

Professor Martyn Kingsbury, Director of Educational Development at Imperial, delivered a highly useful session on encouraging institutional change through assessment and feedback. Despite investing a substantial amount of money (£90 million over 10 years) in a curriculum review process, they have encountered huge resistance to change and failed to reduce over-assessment. Assessment is closely tied to disciplinary views, and this, together with a reluctance to abandon old assessments, posed significant challenges. Imperial have tried both top-down and bottom-up approaches but achieved limited change through curriculum review. However, they have since made progress by establishing faculty education champions who work one day a week on the project, and by creating “The Anatomy of an Assessment” resource: 30 staff interviews, 42 videos and 18 case studies. It is built like an anatomy textbook in that it takes the whole area and breaks it down, so you can take a high-level view or get down to the detail of what is appropriate. One of the most successful aspects of the work was that the money was allocated competitively: module teams had to bid for funds to spend on assessment redesign, which meant they had a vested interest in being involved. In a separate session Leeds University presented their institution-wide assessment project, with work focusing on programmatic and synoptic assessment, aiming for fairness, inclusivity, authenticity, and digital enablement. They also brought in 75 new lecturers to support the initiative.

Professor Sally Everett, Vice Dean (Education) at King’s College London, shared her insights on assessment change. Initially confident about making change happen (while at Anglia Ruskin she was involved with improving the NSS from 55% to over 70%), she realised that assessment is actually a wicked problem, and change is incredibly difficult. To inform her plenary she engaged assessment experts in focus groups and round table chats, observing that assessment conversations often centre on individuals and evoke strong emotions, with people using war and resistance metaphors. Sally highlighted the importance of addressing attitude, anxiety, and time affluence. Her suggestions included involving alumni, employers, and academic staff in collaborative discussions, providing subject-specific guidance, training PGTAs in effective marking, conducting myth-busting sessions, and fostering change champions.

Other notable sessions I attended included:

- Mini-plenary from Dr Milena Marinkova and Joy Robbinson from Leeds University on unessays, where students select their own topics and present them in their preferred formats.

- Dr Miriam Firth from the University of Manchester presented on the optionality project involving UCL (AHE Optionality Slides).

Thanks #Assessmentconf23 @AheConference for the opportunity to #keynote present our study on #optionality / #studentchoice across four #HigherEducation institutions @OfficialUoM @UniOfYork @ucl @imperialcollege @QAAtweets https://t.co/t5lf1uFT96 pic.twitter.com/ljUKuqJ1JP

— Dr Miriam Firth (@DrMiriamFirth) June 23, 2023

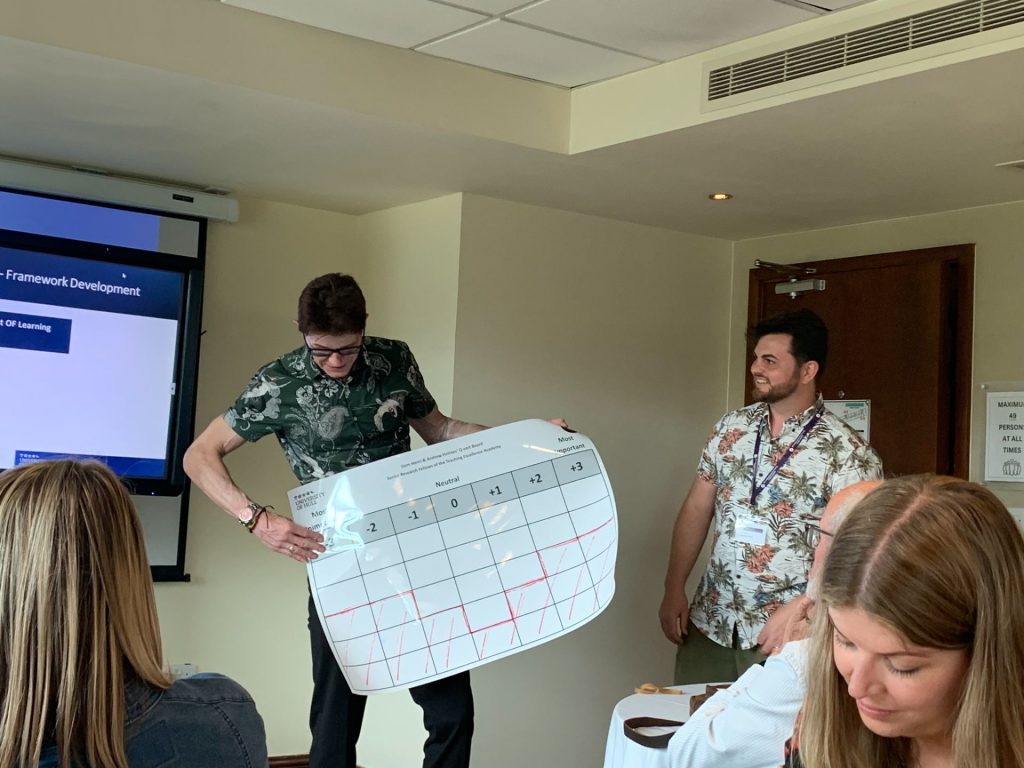

- A team from the University of Hull, which has moved to a competencies model rather than learning outcomes, demonstrated their Q-sort board. This is a ‘forced Likert board’ that they use in assessment design sessions.

- A workshop session on EAT Framework (Enhancing Assessment and Feedback Techniques) led by Professor Stephen Rutherford (Cardiff University) and Dr Sheila Amici-Dargan (University of Bristol) offered ideas for improving assessment literacy and design across institutions.

- Professor Alex Steel from UNSW in Australia and Ishan Kolhatkar from Inspera shared insights on the implementation of the Inspera assessment platform at UNSW and compared their challenges with the 16-point adoption model by Liu, Geertshuis and Grainger (Understanding academics’ adoption of learning technologies: A systematic review).

- The University of Manchester team (Dr Illiada Eleftheriou and Ajmal Mubarik) discussed their AI code of conduct and AI ethical framework, both co-created with students and applied in the “AI: Robot Overlord, Replacement, or Colleague” module.

- Dr Liesbeth Baartman from Utrecht University of Applied Sciences provided guidance on programmatic assessment and explained how it works, categorising decisions as low-stakes or high-stakes and tracking data points in a portfolio. Continuous feedback is provided by teachers, fellow students, and clients, indicating a positive approach. The team also mentioned their professional learning community dedicated to program-level assessment.

Socially inclusive assessment – by Isobel Bowditch

It’s hard to make a call amongst so many great AHE conference sessions, but a standout for me was ‘Assessment for inclusion: can we mainstream equity in assessment design? Can we afford not to?’ with Rola Ajjawi, David Boud, Phil Dawson and Juuso Henrik Nieminen. The focus was on social inclusion, i.e. thinking of students not in terms of categories of learners but as people who are citizens of the world, drawing on the social justice in education work of Joanna Tai, Jan McArthur and others.

Rather than trying to address the multiple layers of difference in our educational communities (an impossible feat?), the conversation was underpinned by values of relationality (how assessment design can build relations), representation (involving diverse student voices in assessment design and evaluation) and critical conversations (identifying gaps in provision and the values that we want to inform our assessment methods).

Coming at it from a quality angle, Phil Dawson made a pertinent and thought-provoking point: increased security measures can actually interfere with the validity of our assessments through exclusion. For example, invigilated or proctored exams can disadvantage students with anxiety or with neurodiverse gaze or behaviour patterns. This means that our ability to assess particular outcomes in all students is compromised because we have put barriers in the way.

Interestingly, when Dawson asked the audience whether we had participated in a moderating exercise to establish reliability, we all raised our hands. When he asked how many had done a validity exercise, no one had.

This made me reflect on the increase in demand for more assessment security measures such as in-person invigilated exams, lockdown browsers and remote proctoring that we have seen post COVID and now again in response to AI (at least until we work out what to do about it!).

At the same time there has been a huge increase in requests for reasonable adjustments from students with different needs. This seems to chime with Dawson’s reading that emphasising security measures, however important they are, risks sidelining some of our other core assessment principles such as equity and inclusion.

See the OA book associated with this session: Assessment for inclusion

Activating students’ inner feedback – by Isobel Bowditch

It was great to see David Nicol’s work on inner feedback (see Unlocking the Power of Inner Feedback) gaining so much traction at the conference. It is something I’ve been interested in following up for some time, so to see it covered in various ways throughout the two days was a treat. The premise is that students are always generating inner feedback by making comparisons between their own thinking or work and that of others, whether that be comments from peers, lectures, articles and so on. David Nicol’s approach is to make this process a conscious and directed activity by providing students with material to compare their own work against in the classroom. These comparators can be in any format (video, blog, newspaper article etc.). Students evaluate their own work against them: is there anything missing? Anything they have contributed that the comparator hasn’t? What have they learned in the process? They then apply what they have learned to their own work, identifying similarities, differences, what they have done to revise their work and so on. The process is scaffolded through comparison guidelines: it starts with self-directed feedback and moves on to peer feedback, and the tutor only provides feedback at the end of the process. Interestingly, the feedback that tutors gave at the end often reaffirmed the self and peer feedback.

Kay Sambell, Linda Graham and Fay Cavagin (Cumbria University) talked about their inspiring action research project based on this method with a first-year Childhood Studies cohort. The findings were that students reported feeling knowledgeable and confident, enjoyed their assignments, and developed a sense of belonging both at university and in their discipline and professional context.

Suzanne McCallum (Glasgow University) discussed how she has used this with entry-level students in their first year. She explained how, from the very start, it helps develop students’ critical thinking skills, and how it can be used flexibly over either short timeframes or longer periods.

Willie McGuire (Glasgow University) gave a 7-minute keynote about using this comparative feedback process to help students draft work, with exemplars of different qualities as comparators. He sees this process as a ‘catalyst for action’ (Glasgow University’s education strategy is informed by an Active Blended Learning approach). The trick is in getting effective comparators, so these need to be carefully selected.

Nicol’s approach would be a valuable resource for UCL staff, as it seems so empowering for students and provides a mechanism to put into practice the proposition of David Boud and others that the focus of feedback needs to be on what students do rather than what we (educators) provide. At the same time, by all accounts, it also reduces the workload for staff (at least after set-up). A win-win!