Before the summer, the Digital Assessment Team had a mini away day to look back over the previous academic year (2022/23) and forward to the next (2023/24).

The 4 Ls

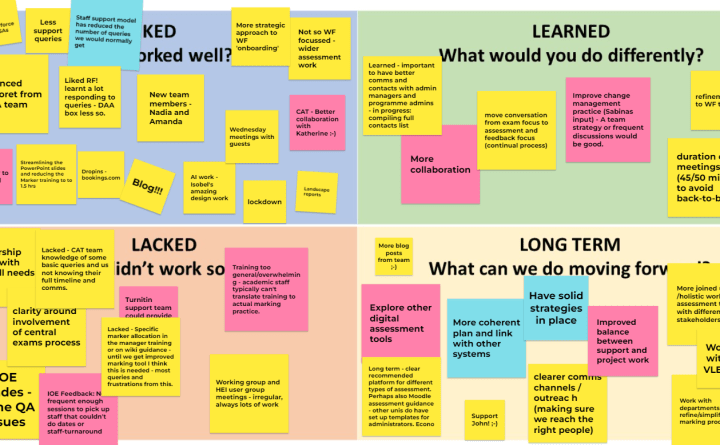

We started off with a Liked, Learned, Lacked and Longed for (or Long-term) retrospective exercise, which allowed us to work through some of the issues and potential areas for improvement. Here are a few of our choicest observations:

Liked – what worked well?

- Staff support model – Clarity around staff support model and Remedy force queries, enhanced support from the Digital Education Support Analysts (DESA) team.

- Training – Consistency in training delivery and frequency, drop-in sessions, streamlining marker training slides and reducing training time.

- Onboarding – More strategic approach to Wiseflow onboarding.

- Wider focus – Shifting focus to wider assessment work, beyond just Wiseflow. AI work, specifically assessment design resources.

- Collaboration – Improved collaboration with the Central Assessment Team and successful lockdown browser pilot.

Learned – What would you do differently?

- Comms – Need a comms strategy to map out processes

- Increased collaboration is necessary.

- Continued shift – Take conversations from an exam focus to an assessment and feedback focus. Improvement of change management practices.

- Training – Continued refinements to Wiseflow training.

- Meetings – Limiting meeting durations to 45/50 minutes to avoid back-to-back scheduling.

Lacked – What didn’t work so well?

- CAT – Partnership model with the Central Assessment Team is a good start. We will want to improve CAT team knowledge on some queries. Still insufficient clarity on central assessment involvement and exams processes.

- Guidance – Lack of specific guidance on marker allocation in manager training and the wiki guidance. Quality assurance issues in marking processes.

- Working group – Irregularity and lack of focus in working group and HEI user group meetings.

Long-term – What can we do moving forward?

- Support – Move away from immediate responses for staff (as we can spread ourselves too thinly) to ensure knowledge is more widely spread.

- Project work – Achieve an improved balance between support and project work. Create availability for project work.

- Other platforms – Fill gaps in knowledge and support for other platforms and explore additional digital assessment packages. Collaborate further with the VLE team.

- Strategy – Develop a more coherent plan.

- Recommendations – Provide clear guidance for different types of assessments, including Moodle.

- Marking – Collaborate with departments to refine and simplify marking processes.

Training

This led us to focus on our training work. Over the past 2 years we have delivered:

- 100+ training sessions (covering 3 main roles – Authoring, Managing & Marking)

- Training to 600+ staff

- Bespoke training for CLIE, Laws, Philosophy, IRDR and Biochemistry

- Additional training to meet needs: MCQ & Rubric masterclass

- Weekly drop-in sessions in 2022, restarting in 2023 for main exam period.

- 2 x F2F Manager training sessions in 2023

- Lots of ad-hoc training!

This level of training has been fairly intense, and we are looking to revise it for the forthcoming year in line with our onboarding strategy for 2023/24. The main ideas behind the strategy are covered in the table below, but there will be work to communicate these ideas on the "Recommendations and steps for departments using AssessmentUCL" page and in the proposed AssessmentUCL Blueprints.

| AssessmentUCL should be used for: | AssessmentUCL should not be used for: |
| --- | --- |
| Central assessments – online remote assessments | Central assessments with complex set up e.g. some FlowMultis. Pick this up through CAT collected info |
| Existing departments / departmental assessments that have already moved to AssessmentUCL and are working well | New departmental assessments where there is no benefit in moving and/or does not have robust admin |
| New departmental assessments with significant issues and where AssessmentUCL can help | New departmental assessments that digress from the Blueprints or will result in significant workarounds |
| New entire departments with robust admin support level – partnership model with clear contract and meeting schedule | New departmental assessments with little or unstable admin support |
| New assessments suitable for platform (screening process required) | |

At the end of the away day we spent some time looking at other work we would like to carry out over the forthcoming academic year.

- Onboarding strategy 2023-24 – process map, clarity for onboarding, improve recommendations page, possible use contract, list of meetings and outcomes, links to resources from other departmental onboardings

- Comms strategy – map out processes, define comms types, define methods/places

- CAT information – looking at what information is submitted on central assessments and how it is used; guidance information

- CAT support – Increasing CAT support for central assessments e.g. sharing guidance, marker allocation info etc.

- Digital Assessment gap analysis – includes understanding process and platforms, identifying gaps. AI detection, Moodle links. AI work and assessment redesign.

- Digital assessment matrix/diagnostic tool – benefits and challenges of each UCL platform, compromises required

- Blueprints revisited

- …

- Work to not take place – new marking tool training, wiki redesign, some onboarding

Some of this work will be picked up by the Assessment and Feedback product team. For example, a Business Case has been approved on the Future of Assessment, which will involve an audit of existing tools and processes, a gap analysis and possible proofs of concept for new tools.