Earlier this week, King’s Academy and UCL’s Digital Assessment Team joined forces to host a one-day assessment event featuring Professor Phillip Dawson, Associate Director of the Centre for Research in Assessment and Digital Learning (CRADLE) at Deakin University in Australia. Phill is a renowned figure in education, particularly in feedback literacy and academic integrity. The event, held at Bush House on King’s College London’s Strand Campus, gave educators and professional services staff a chance to explore feedback literacy and future-authentic assessment.

Morning Session: Exploring Feedback Literacy

The day kicked off with a warm welcome from Professor Adam Fagan, Vice President (Education and Student Success) at King’s College London, followed by an extensive interactive presentation led by Phill. During the session, the 100+ participants had the opportunity to reflect on their own responses to feedback and their thoughts on feedback delivery. It proved to be an incredibly valuable session, offering practical tips and insights. Here are some key takeaways:

- Feedback often goes to waste: academics write extensive feedback that is rarely read or acted upon. This can be disheartening for both parties; as one audience member put it, it can be ‘crushing’. To get the most out of feedback, it is important to be strategic. Instead of providing feedback at the end of a module, when there is no room left for improvement, consider offering it on early formative assessments to support development.

- Shifting the focus to what students do with feedback is crucial. “Feedback should be more work for the recipient than the donor” (Wiliam, 2011). Encouraging students to actively engage with feedback, ask questions, and plan how to use it is an important part of feedback literacy. Teaching should be viewed through the lens of what the student does, rather than what the teacher does or what the student is, emphasising student agency and engagement. There are useful ideas on the CRADLE Feedback for learning site.

- Rejecting feedback is a valid choice. Trust in the feedback provider and the student’s own judgment are important. Student uptake of feedback can be an indicator of feedback literacy. Overusing the term ‘feedback’ may dilute its significance, so it’s important to ensure that students actively recognise and engage with the feedback they receive.

- As part of the assessment process, some academics ask students what kind of feedback they would like to receive: evaluation (this is how well you did), coaching (how you can improve), or appreciation (I see you). Understanding students’ expectations and aligning them with the feedback they receive is crucial. Dissatisfaction can arise when what students expect and what they receive do not match.

- Feedback practices in academia may not always align with real-world practices. Exploring authentic feedback and aligning assessment with real-world scenarios can enhance the learning experience.

- Developing the skill of giving feedback requires training, peer review, practice, and self-reflection.

- Feedback has a ‘Daversity problem’: too many Davids! David Carless and David Boud, to name two. Even Dawson actually means ‘David’s son’.

- There are differences between the UK and Australian higher education systems, particularly in scale and regulation; at some universities, people work full-time just on marking and feedback. Anonymous marking isn’t usual practice in Australia. Approaches such as ipsative audio feedback, which compares a student’s work with their own previous performance, require markers to know their students well and to mark accordingly.

Some of these ideas were explored at last year’s Assessment in Higher Education conference (covered in this blog post: AHE conference and the joy of assessment). Phill will be one of the plenary speakers at this year’s AHE Conference later this month.

Afternoon Session: Don’t Fear the Robot: Future-authentic Assessment and Generative Artificial Intelligence

After a networking lunch, the afternoon session saw Phill turn to academic integrity and the evolving landscape of assessment. Reassuringly, the content aligned closely with some of the thinking in the UCL AI experts group. Here are the key insights from this session:

- There are many interesting cheating case studies, from people cheating in ethics exams to famous bridges, designed by cheats, that fell down during an earthquake. The key point is that assessment really matters: we want to ensure the validity of our degrees.

- Generative AI can do a lot of what we currently assess. However, using generative AI is not inherently cheating; the perception of cheating is socially constructed. Banning its use is not a feasible solution. A ban is a restriction, and restrictions that don’t work are theatre. In Australia, over 200 contract cheating sites have been banned; unfortunately, Chegg isn’t on the list. AI detection doesn’t work.

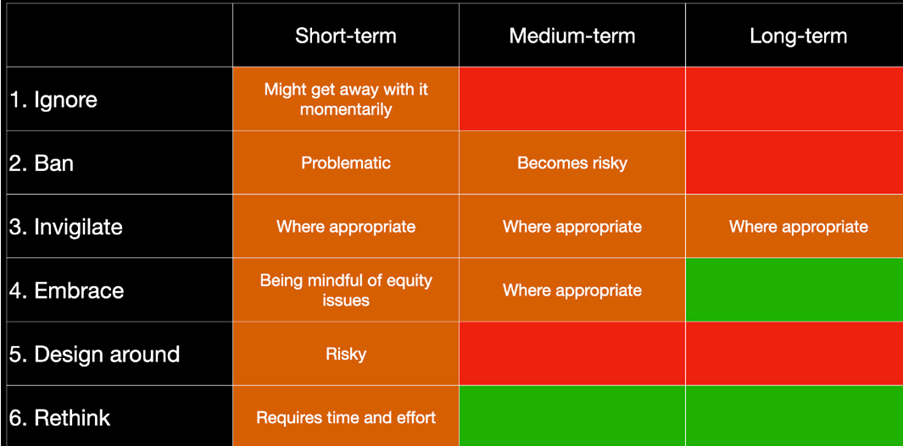

- Long-term change processes are more effective than quick hacks: what is easy in the short term won’t work in the long term. See ‘Assessment redesign for generative AI: A taxonomy of options and their viability’ (below) for a matrix that illustrates this.

- Assessment needs to prepare students for their future, not our past.

- There may be some areas where we need more supervised assessment, but we will want a broad spectrum of invigilated activities, including oral assignments and vivas.

- One approach is to look at assessment through a validity lens. “Don’t be scared of validity! Generative AI is a secondary concern to validity. Restrictions that can’t be enforced hurt validity. Exclusion hurts validity. Cheating hurts validity.”

- Authentic assessment is not a solution to cheating, but it can enhance relevance and student engagement. Authenticity should encompass various future scenarios, including techno-utopia, techno-dystopia, and even collapse or catastrophe.

- Students should learn how to navigate assessments both with and without the assistance of generative AI. Scaffolding and reverse-scaffolding (for example, allowing students to use AI once certain skills have been mastered) can support students’ development and decision-making.